Introduction to Log File Analysis

What is a Log File?

A log file is akin to a detailed journal for computers, where every interaction between a user or entity and a system is recorded. These digital records can capture a wide array of actions performed within an operating system, application, or server, meticulously noting event sequences with timestamps. This repository of information is essential for various analytical purposes and troubleshooting.

The Role of Log File Analysis in SEO

Log file analysis holds a pivotal role in enhancing Search Engine Optimization (SEO) as it offers a clear window into how search engines interact with your website. This process is an integral part of a technical SEO audit, unveiling the specifics of each crawler visit to fine-tune your site’s structure and content presentation for optimal indexing by Google and other search engines.

Key Components of Log Files

For SEO work, the relevant log files are web server access logs, in which each entry documents one HTTP request. Within these entries, you’ll find a wealth of data, though the core components include:

- URL path: The specific address of the requested resource within your website.

- Query string: Any additional data attached to the URL which might include search parameters or tracking codes.

- User-Agent: The software (usually a web browser or crawler) that initiated the request.

- IP Address: The network address the request came from. It can indicate an approximate geographic location and helps verify that a request claiming to come from a search engine crawler is genuine.

- Timestamp: Precise date and time when the request was received by the server.

- Request method: The HTTP method used, most commonly GET (retrieve a resource) or POST (submit data to the server).

- HTTP Status Code: The three-digit code returned by the server, indicating success (2xx), redirection (3xx), client error (4xx), or server error (5xx).

Understanding these components is crucial for an effective analysis as they reveal not just visitor activity but also potential areas where the web experience may be enhanced.

Steps for Effective Log File Analysis

Step 1: Collecting Log Data

Collecting log data serves as the first and crucial step in log file analysis. It’s about aggregating all the log entries produced by your web servers, applications, and various network devices. While logs can be accessed manually, efficiency soars when utilizing automated tools that streamline the process. These tools can aid in continuously collecting, centralizing, and preparing log data for examination, often storing the information for later use—a necessity for a comprehensive and timely analysis.
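
As a rough illustration of the collection step, the sketch below gathers lines from a directory of rotated access logs. The file naming (`access.log`, `access.log.1.gz`) and the helper name `iter_log_lines` are assumptions for the example; your server or hosting provider may rotate and name files differently.

```python
import gzip
from pathlib import Path
from typing import Iterator


def iter_log_lines(log_dir: str, pattern: str = "access.log*") -> Iterator[str]:
    """Yield every line from the matching log files, in filename order.

    Files ending in .gz are decompressed on the fly; everything else is
    read as plain text.
    """
    for path in sorted(Path(log_dir).glob(pattern)):
        opener = gzip.open if path.suffix == ".gz" else open
        # errors="replace" keeps one malformed byte from aborting the run.
        with opener(path, "rt", encoding="utf-8", errors="replace") as handle:
            for line in handle:
                yield line.rstrip("\n")
```

Because the function is a generator, lines are read one at a time rather than loaded into memory all at once, which matters once the logs grow large.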

Step 2: Parsing Log Files

Parsing log files is the process of converting the raw log data into a structured format that is easier to understand and analyze. This step typically involves breaking each line in a log file into its constituent parts, such as IP address, timestamp, and user agent, which allows you to filter and sort the data effectively. Parsing requires a tool or script that matches the log format your servers generate, since formats differ between servers and configurations. Proper parsing allows for a detailed analysis and provides a foundation for actionable insights.
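
To make the parsing step concrete, here is a minimal sketch that splits one line into named fields. It assumes the Combined Log Format commonly written by Apache and nginx; the pattern, the `parse_line` helper, and the sample line are illustrative and will need adjusting if your server logs a different format.

```python
import re
from datetime import datetime
from typing import Optional

# Assumed input: Combined Log Format. Adjust the pattern for other formats.
LINE_RE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>[A-Z]+) (?P<target>\S+) [^"]*" '
    r'(?P<status>\d{3}) (?P<size>\d+|-) '
    r'"(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)


def parse_line(line: str) -> Optional[dict]:
    """Return the fields of one log line, or None if it does not match."""
    match = LINE_RE.match(line)
    if match is None:
        return None
    entry = match.groupdict()
    # Split the request target into URL path and query string.
    path, _, query = entry.pop("target").partition("?")
    entry["path"] = path
    entry["query"] = query
    entry["status"] = int(entry["status"])
    entry["time"] = datetime.strptime(entry["time"], "%d/%b/%Y:%H:%M:%S %z")
    return entry


# Made-up sample line for illustration.
sample = (
    '66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] '
    '"GET /blog/post?utm_source=x HTTP/1.1" 200 5124 '
    '"-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"'
)
entry = parse_line(sample)
```

Lines that do not match return `None` instead of raising an error, so a handful of malformed entries will not stop a long run; counting those misses is a useful check that the pattern fits your logs.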

Step 3: Analyzing Logs

Once logs are parsed, analysis is where you start to uncover valuable insights. Look for trends, anomalies, or outliers that could signify performance issues or potential security threats. Analysis can also involve correlating different fields to answer specific questions, such as understanding user behavior patterns, gauging system health, or identifying the most visited pages on your site. Simple counts and groupings answer many of these questions; for larger datasets, statistical or machine learning techniques can help spot less obvious patterns.
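
A first pass at analysis often needs nothing more than counting. The sketch below works on a small, made-up list of `(path, status)` pairs standing in for parsed entries, and derives the status code distribution, the most requested pages, and the share of server errors.

```python
from collections import Counter

# Hypothetical parsed entries: (path, status) pairs.
entries = [
    ("/", 200), ("/pricing", 200), ("/", 200),
    ("/old-page", 404), ("/pricing", 500), ("/", 200),
]

# How many requests ended with each status code.
status_counts = Counter(status for _, status in entries)

# The two most requested paths.
top_pages = Counter(path for path, _ in entries).most_common(2)

# Share of all requests that ended in a server error (5xx).
server_errors = sum(n for status, n in status_counts.items() if status >= 500)
error_rate = server_errors / len(entries)
```

Tracking the same figures per day turns these snapshots into trends, which is where sudden jumps in errors or drops in crawl activity become visible.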

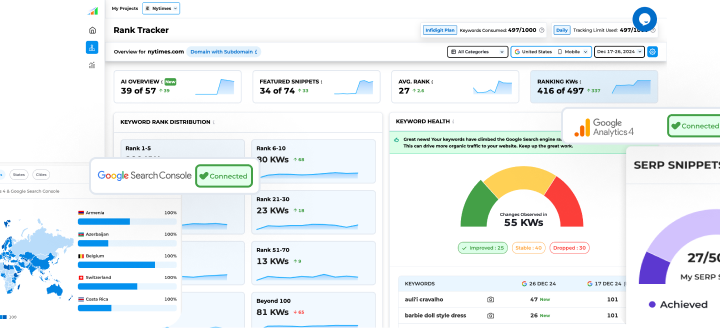

Step 4: Visualizing Data

Visualizing data brings a new dimension to log analysis, transforming complex datasets into graphics that are easier to digest. Well-designed dashboards play a pivotal role, showcasing key performance indicators and trends through charts, graphs, or heatmaps. You should aim for visualizations that are not only intuitive but tell a compelling story about the data. Color coding, for instance, can help highlight critical issues, and interactive elements allow for deeper exploration of the information presented.
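
Dashboards are usually built in a dedicated tool, but the idea can be sketched with a plain-text bar chart. The function name `text_bar_chart` and the daily hit counts below are made up for illustration; this is a stand-in for a charting library, not a replacement for one.

```python
def text_bar_chart(counts: dict[str, int], width: int = 40) -> str:
    """Render one '#' bar per label, scaled so the largest value fills `width`."""
    if not counts:
        return ""
    peak = max(counts.values()) or 1  # avoid dividing by zero when all values are 0
    lines = []
    for label, value in counts.items():
        bar = "#" * round(value / peak * width)
        lines.append(f"{label:<12}{bar} {value}")
    return "\n".join(lines)


# Hypothetical daily crawler hit counts.
daily_hits = {"2023-10-09": 120, "2023-10-10": 240, "2023-10-11": 60}
chart = text_bar_chart(daily_hits, width=20)
print(chart)
```

Even this crude view makes a day-over-day drop in crawl activity easier to notice than a column of numbers would.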

Step 5: Taking Action

The final stride in log file analysis is taking action based on the insights gleaned. This involves making informed decisions to address detected issues, such as fixing server errors, optimizing for crawl efficiency, or enhancing website security. By setting benchmarks and monitoring the impact of the changes, you can validate the effectiveness of your actions. Regular review and adaptation are essential, as the dynamic nature of websites and user behavior means log file analysis should be an iterative process.

The Insights You Can Gain

Understanding Crawl Behavior and Frequency

Understanding crawl behavior and frequency illuminates how search engines like Google discover and revisit your web pages. By analyzing logs, you can discern the pattern and frequency with which search engine bots crawl your site. This understanding can reveal which pages are being frequently crawled and signal their importance to search engines. Conversely, identifying pages with low crawl rates may indicate issues with accessibility, content quality, or lack of internal linking, prompting the need for SEO adjustments.
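
Crawl frequency can be estimated by counting crawler requests per URL. The sketch below assumes entries have already been parsed into `(day, path, user_agent)` tuples and matches the crawler by a substring of its User-Agent; the sample data and the `crawl_frequency` helper are hypothetical.

```python
from collections import defaultdict
from datetime import date

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BROWSER_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"

# Hypothetical parsed entries: (day, path, user_agent).
hits = [
    (date(2023, 10, 9), "/", GOOGLEBOT_UA),
    (date(2023, 10, 10), "/", GOOGLEBOT_UA),
    (date(2023, 10, 10), "/", GOOGLEBOT_UA),
    (date(2023, 10, 10), "/archive/2015", GOOGLEBOT_UA),
    (date(2023, 10, 10), "/", BROWSER_UA),
]


def crawl_frequency(hits, bot_token="Googlebot"):
    """Map each path to (bot requests, distinct days with a bot request)."""
    counts = defaultdict(int)
    days = defaultdict(set)
    for day, path, user_agent in hits:
        if bot_token.lower() in user_agent.lower():
            counts[path] += 1
            days[path].add(day)
    return {path: (counts[path], len(days[path])) for path in counts}


frequency = crawl_frequency(hits)
```

User-Agent strings can be spoofed, so matching on the string alone is a simplification; a stricter analysis would also verify the requesting IP address before counting a hit as a genuine crawler visit.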

Spotlighting Indexability Issues and Redirect Flaws

Spotlighting indexability issues and redirect flaws helps to ensure that search engines can efficiently index your website. Log file analysis can reveal whether crawlers are encountering broken links, server errors, or improper redirects, including inconsistent handling of trailing slashes, all of which can affect your site’s SEO performance. For instance, using temporary (302) redirects where a permanent (301) move is intended can keep crawlers returning to the old URL, and long redirect chains spend crawl budget on requests that return no content. Identifying such issues allows you to make targeted adjustments, such as fixing redirect chains or removing non-indexable trailing-slash variants from sitemaps, to improve your site’s search engine visibility.
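
One way to surface these problems is to group crawler requests by status class and look for telltale patterns. The sketch below uses made-up `(path, status)` pairs for crawler hits; it flags temporary redirects and paths that were requested both with and without a trailing slash.

```python
from collections import Counter

# Hypothetical crawler requests: (path, status).
bot_hits = [
    ("/shoes", 301), ("/shoes/", 200), ("/sale", 302), ("/sale", 302),
    ("/about", 200), ("/missing", 404),
]


def status_class(status: int) -> str:
    """Collapse a status code into its class, e.g. 404 -> '4xx'."""
    return f"{status // 100}xx"


# Overall picture: how crawler requests split across status classes.
by_class = Counter(status_class(status) for _, status in bot_hits)

# Paths answering with a temporary redirect (302 or 307), with hit counts.
temporary_redirects = Counter(path for path, status in bot_hits if status in (302, 307))

# Paths crawled both with and without a trailing slash.
paths = {path for path, _ in bot_hits}
slash_pairs = sorted(p for p in paths if not p.endswith("/") and p + "/" in paths)
```

Access logs normally record the status code but not the redirect target, so confirming a full redirect chain still requires requesting the URLs or checking the server configuration.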

The Impact of Log File Analysis on SEO Optimization

Enhancing Site Performance and User Experience

Enhancing site performance and user experience is directly linked to your website’s success in search rankings and visitor satisfaction. By analyzing log files, you can pinpoint slow-loading pages, frequently occurring server errors, and problematic scripts that might be hindering your site’s performance. Addressing these issues not only bolsters your SEO efforts by making your site more crawl-friendly but also ensures that visitors have a seamless and enjoyable experience, increasing the likelihood of conversions and return visits.
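
Finding slow pages requires that your server records response time, which the default access log formats do not include; it has to be enabled (for example via nginx’s `$request_time` or Apache’s `%D`). Assuming that field is available and parsed into seconds, a sketch along these lines ranks paths by median response time. The sample timings, thresholds, and the `slow_paths` helper are illustrative.

```python
from collections import defaultdict
from statistics import median

# Hypothetical (path, response time in seconds) pairs.
timings = [
    ("/search", 2.0), ("/search", 2.5), ("/search", 3.0),
    ("/", 0.1), ("/", 0.2), ("/", 0.1),
    ("/one-off", 9.0),
]


def slow_paths(timings, threshold_s=1.0, min_hits=3):
    """Return (path, median seconds) for paths slower than the threshold.

    Paths with fewer than `min_hits` requests are skipped so that a single
    slow request does not dominate the report.
    """
    by_path = defaultdict(list)
    for path, seconds in timings:
        by_path[path].append(seconds)
    medians = {
        path: median(values)
        for path, values in by_path.items()
        if len(values) >= min_hits
    }
    slow = [(path, m) for path, m in medians.items() if m > threshold_s]
    return sorted(slow, key=lambda item: item[1], reverse=True)


report = slow_paths(timings)
```

The median is used rather than the mean so that one unusually slow request does not distort the picture for an otherwise fast page.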

Fine-Tuning Your Crawl Budget for Maximum Efficiency

Fine-tuning your crawl budget for maximum efficiency ensures that search engine bots spend their time wisely on your site, focusing on content that matters. Through log file analysis, you can identify and eliminate waste—such as non-essential pages being crawled—to direct the crawl budget toward high-value areas. This includes optimizing your site’s architecture, improving link equity distribution, and ensuring that updated or new content is discovered promptly. By managing your crawl budget effectively, you heighten the potential for your most important pages to rank well.
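
A simple way to estimate crawl budget waste is to classify the URLs crawlers request. In the sketch below, the set of "low-value" parameter names is purely an assumption for the example; which parameters count as waste depends entirely on your site.

```python
from collections import Counter
from urllib.parse import parse_qsl, urlsplit

# Hypothetical parameters that only create duplicate views of a page.
LOW_VALUE_PARAMS = {"sort", "sessionid", "utm_source"}


def classify(target: str) -> str:
    """Label a requested URL by the kind of query parameters it carries."""
    query = urlsplit(target).query
    params = {name for name, _ in parse_qsl(query, keep_blank_values=True)}
    if params & LOW_VALUE_PARAMS:
        return "low-value parameter"
    if params:
        return "other parameter"
    return "clean URL"


# Hypothetical request targets taken from crawler hits.
bot_targets = [
    "/products",
    "/products?sort=price",
    "/products?sort=name&page=2",
    "/blog?page=3",
]

breakdown = Counter(classify(target) for target in bot_targets)
waste_share = breakdown["low-value parameter"] / len(bot_targets)
```

A high share of crawler requests in the low-value group suggests reviewing internal links, canonical tags, or robots.txt rules for those URL patterns.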

Overcoming Common Challenges in Log File Analysis

Dealing with Large Data Sets

Dealing with large data sets in log file analysis can be challenging due to the sheer volume and complexity of the data involved. Using robust data management techniques like data compression, employing databases optimized for log management, and leveraging Big Data analytics tools can help. It’s also beneficial to adopt a selective logging approach, capturing only the most relevant information to reduce data volume without compromising on insight quality. By streamlining data handling, you ensure timely and efficient log analysis suited for your SEO needs.
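
One practical way to shrink the data before analysis is to stream through the raw logs once and keep only search engine crawler lines in a compressed file. The token list and the `keep_bot_lines` helper below are illustrative assumptions; extend the tokens to cover the crawlers you care about.

```python
import gzip
from typing import Iterable

# Illustrative User-Agent substrings; not an exhaustive list of crawlers.
BOT_TOKENS = ("googlebot", "bingbot")


def keep_bot_lines(lines: Iterable[str], out_path: str) -> int:
    """Write lines mentioning a known crawler to a gzip file; return the count.

    Lines are handled one at a time, so memory use stays flat regardless
    of how large the input is.
    """
    kept = 0
    with gzip.open(out_path, "wt", encoding="utf-8") as out:
        for line in lines:
            lowered = line.lower()
            if any(token in lowered for token in BOT_TOKENS):
                out.write(line.rstrip("\n") + "\n")
                kept += 1
    return kept
```

On most sites crawler traffic is a small fraction of total requests, so the filtered file is typically far smaller than the original and quicker to load into any analysis tool.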

Addressing the Limitations of Indexing and Classification

Addressing the limitations of indexing and classification becomes imperative when you depend on log data for real-time insights. Indexing, while useful for organizing data, can introduce latency, as new data takes time to be indexed and become searchable. Moreover, if the data isn’t indexed properly, it may not be accessible when needed. Free-text search helps work around these limitations by allowing searches across any log data field, including fields that were never indexed or classified. Efficient tagging and classification can further streamline the analysis process by grouping related events, enhancing the speed and precision of diagnosing issues.

Practical Applications of Log File Analysis

Discovering Uncrawled and Orphan Pages

Discovering uncrawled and orphan pages is one of the most practical uses of log file analysis. Orphan pages have no internal links pointing to them, so a crawl of your own site will not find them; they surface in log files when bots or visitors still reach them through external links, old sitemaps, or redirects. Uncrawled pages are the reverse case: they are linked within your site but never appear in bot requests. Comparing the URLs in your logs against the URLs from a site crawl or sitemap exposes both groups. You can then reintegrate valuable content by updating it, improving the link structure, or creating new entry points so that these pages are included in the search engine’s crawl and indexation process.
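
The comparison behind this technique is a pair of set differences: URLs seen in the logs but not in a crawl of your own site are orphan candidates, and URLs found in the crawl but absent from crawler requests are uncrawled. The sketch below assumes you already have both URL lists; the function name and sample paths are hypothetical.

```python
def compare_crawl_and_logs(linked_urls, logged_urls):
    """Split URLs into orphan candidates and uncrawled pages.

    linked_urls: URLs reachable through internal links (e.g. from a site crawl).
    logged_urls: URLs that search engine bots requested, taken from the logs.
    """
    linked, logged = set(linked_urls), set(logged_urls)
    return {
        "orphan_candidates": sorted(logged - linked),
        "uncrawled": sorted(linked - logged),
    }


# Hypothetical inputs.
site_crawl = ["/", "/pricing", "/blog/new-post"]
bot_requests = ["/", "/pricing", "/landing/2019-campaign"]

result = compare_crawl_and_logs(site_crawl, bot_requests)
```

Orphan candidates still need a manual check, since some of them will be retired URLs that now redirect or return an error rather than live pages worth relinking.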

Developing a Tactical SEO Strategy Based on Solid Data

Developing a tactical SEO strategy based on solid data extracted from log file analysis ensures decisions are driven by reality rather than conjecture. This approach allows you to allocate resources wisely, prioritize site optimizations, and even predict future website behavior patterns. A data-driven strategy might involve adjusting your content calendar based on crawl frequency, fixing technical SEO issues that impede bot access, or restructuring your website for better navigation and link equity flow. Armed with specific insights, you can craft an SEO roadmap that aligns perfectly with your site’s unique digital footprint.

Tools for Log File Analysis

Log file analysis is critical for understanding system performance, identifying errors, and optimizing SEO strategies. Below is an overview of some popular tools and a step-by-step guide on how to use them effectively.

1. Splunk

Overview:

Splunk indexes and searches log data from any source—applications, systems, or devices—providing actionable operational intelligence.

Features:

- Powerful search capabilities with real-time visibility.

- Intuitive dashboards for data visualization.

Steps to Use Splunk:

- Setup and Data Ingestion:

  - Install Splunk on your server or use Splunk Cloud.

  - Add data by selecting the log file source on the Splunk platform.

- Searching and Analysis:

  - Use Splunk’s search feature to filter log data and extract insights.

  - Utilize the ‘Search & Reporting’ app for in-depth analysis.

- Visualization and Monitoring:

  - Create dashboards with graphs to monitor log data.

  - Set up alerts for anomalies or critical events.

2. Logz.io

Overview:

Logz.io is built on the open-source ELK Stack (Elasticsearch, Logstash, Kibana) and specializes in cloud monitoring and data optimization.

Features:

- Machine learning-based alerts.

- Built-in data optimization for efficient storage.

Steps to Use Logz.io:

- Integration and Log Shipment:

  - Register and set up Logz.io.

  - Configure log shipment methods such as Beats or Syslog.

- Log Management:

  - Use the Discover feature to explore log data and apply filters.

  - Access Kibana for creating custom dashboards and visualizations.

- Advanced Features:

  - Leverage AI-powered insights for detecting issues or understanding user behavior.

  - Set up alerts for critical thresholds or events.

3. Screaming Frog Log File Analyser

Overview:

This tool is tailored for SEO professionals to analyze search engine bot activity.

Features:

- Identifies which URLs are being crawled, how often they are crawled, and which crawl errors occur.

Steps to Use Screaming Frog:

- Setup:

  - Purchase a license and download the application.

  - Load your log files into the tool for analysis.

- Log Analysis:

  - Filter and explore data such as status codes and bot hit frequency.

- Export Insights:

  - Export actionable insights to guide your SEO strategy.

Short Step Guide for Log File Analysis

To perform log file analysis, follow these steps:

- Enable Logging: Ensure logging is active on the server side for the required infrastructure components.

- Choose a Tool: Select a tool that fits your analysis needs (e.g., Splunk, Logz.io, Screaming Frog).

- Analyze Logs:

  - Load log files into the tool.

  - Apply filters and search queries to extract relevant data.

- Visualize Data: Create dashboards and reports for better interpretation of patterns and anomalies.

- Take Action: Use insights to address errors, monitor performance, or improve SEO strategies.

FAQs

What Exactly Is Log File Analysis?

Log file analysis is the meticulous examination of server logs to extract valuable data on how search engines interact with your website. It helps you understand the behavior of crawlers, identifying how often and which pages are being accessed, as well as spotting any errors or issues impacting SEO performance. This actionable intelligence can significantly influence and refine your SEO strategies.

How Often Should Log File Analysis Be Performed for SEO?

For SEO, log file analysis should be an ongoing process, as it’s crucial to adapt to the continuous evolution of your website and search engine algorithms. Ideally, performing this analysis monthly provides a good balance between being responsive to issues and being resource-efficient. However, for dynamic sites with frequent changes or significant traffic, a fortnightly or even weekly analysis might be more beneficial.

What Types of Log Files Should I Analyze?

You should analyze server access logs, which include detailed records of all requests to your server. These contain valuable information on visitor interactions and bot activity, and can help you identify patterns that affect your website’s SEO. Error logs should also be reviewed to troubleshoot and resolve issues that may negatively impact user experience and search engine rankings.

What Tools Can I Use for Log File Analysis?

For log file analysis, you have tools such as Splunk for enterprise-level insights, Logz.io for cloud-based log management, and Screaming Frog Log File Analyser specifically designed for SEO professionals. Some SEO platforms integrate log file analysis to combine crawl data with log file insights for a more comprehensive overview.

What Metrics Should I Focus On During Analysis?

During log file analysis, focus on metrics such as crawl frequency, status codes (like 200 OK and 404 Not Found), the number of crawl requests, response times, and the volume of data transferred. Also pay attention to the most and least crawled URLs, the distribution of crawls across your site’s content, and any crawl budget wasted on redirect errors or non-essential pages.
